[Weekly Retro] Why trust will define the next generation of AI products

#260 - Jul.2025

Hi there!

Here's a quick idea before you head off for the weekend:

I think trust will become the most important feature of any AI product.

At every interaction, we have an opportunity to gain or break a customer's trust. With so many options out there, the cost of switching to an alternative is low.

People will just "hire" a different product to solve their problems.

Traditional UX design assumes predictable outcomes. Click button = get result. But AI doesn't work that way. LLMs hallucinate and they operate on probabilities.

The shift in mental model for how we design these solutions is not trivial:

- From command-based to intention-based interactions

- From deterministic flows to probabilistic experiences

- From traditional testing mechanisms to continuous evaluation

Four principles that I believe will become key for designing these products:

- Don't overpromise: Set clear capability boundaries upfront. "AI can [outcome] with [level of accuracy]".

- Probe: Continuously guide users with follow-up questions and next steps. Don't leave them hanging in uncertainty.

- Calibrate: Build feedback loops everywhere. Let users correct, rate, and refine AI outputs in real-time.

- Guardrails: Design safety mechanisms and escalation paths (e.g. human-in-the-loop review).
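
To make the last three principles a bit more concrete, here is a minimal sketch of how they could fit together in code. Everything in it is an assumption for illustration: the `ModelOutput` and `TrustLayer` names, the idea that the model exposes a confidence score between 0 and 1, and the two thresholds are all hypothetical, not taken from any specific product or library.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelOutput:
    text: str
    confidence: float  # hypothetical score in [0, 1]; real systems may not expose one


@dataclass
class TrustLayer:
    # Illustrative thresholds; in practice these would be tuned from evaluation data.
    answer_threshold: float = 0.8
    probe_threshold: float = 0.5
    ratings: List[int] = field(default_factory=list)

    def route(self, output: ModelOutput) -> str:
        """Decide what to do with a model output based on its confidence."""
        if output.confidence >= self.answer_threshold:
            return "answer"    # show the result directly
        if output.confidence >= self.probe_threshold:
            return "probe"     # ask the user a follow-up question first
        return "escalate"      # guardrail: hand off to a human

    def record_rating(self, rating: int) -> None:
        """Calibrate: store a 1-5 user rating for later evaluation."""
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.ratings.append(rating)

    def average_rating(self) -> Optional[float]:
        """Return the mean rating, or None if no feedback has been collected."""
        if not self.ratings:
            return None
        return sum(self.ratings) / len(self.ratings)


layer = TrustLayer()
print(layer.route(ModelOutput("Your refund was approved.", 0.92)))
print(layer.route(ModelOutput("I think you mean order #2?", 0.60)))
print(layer.route(ModelOutput("Not sure about this one.", 0.20)))
```

The point is less the thresholds themselves and more that "answer, ask, or hand off" becomes an explicit design decision, with user ratings feeding back into how those thresholds get set.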

There's a big wave of AI products coming. As designers and builders, we (still) have the power to make them safe and truthful.

🧪 Things I've been testing

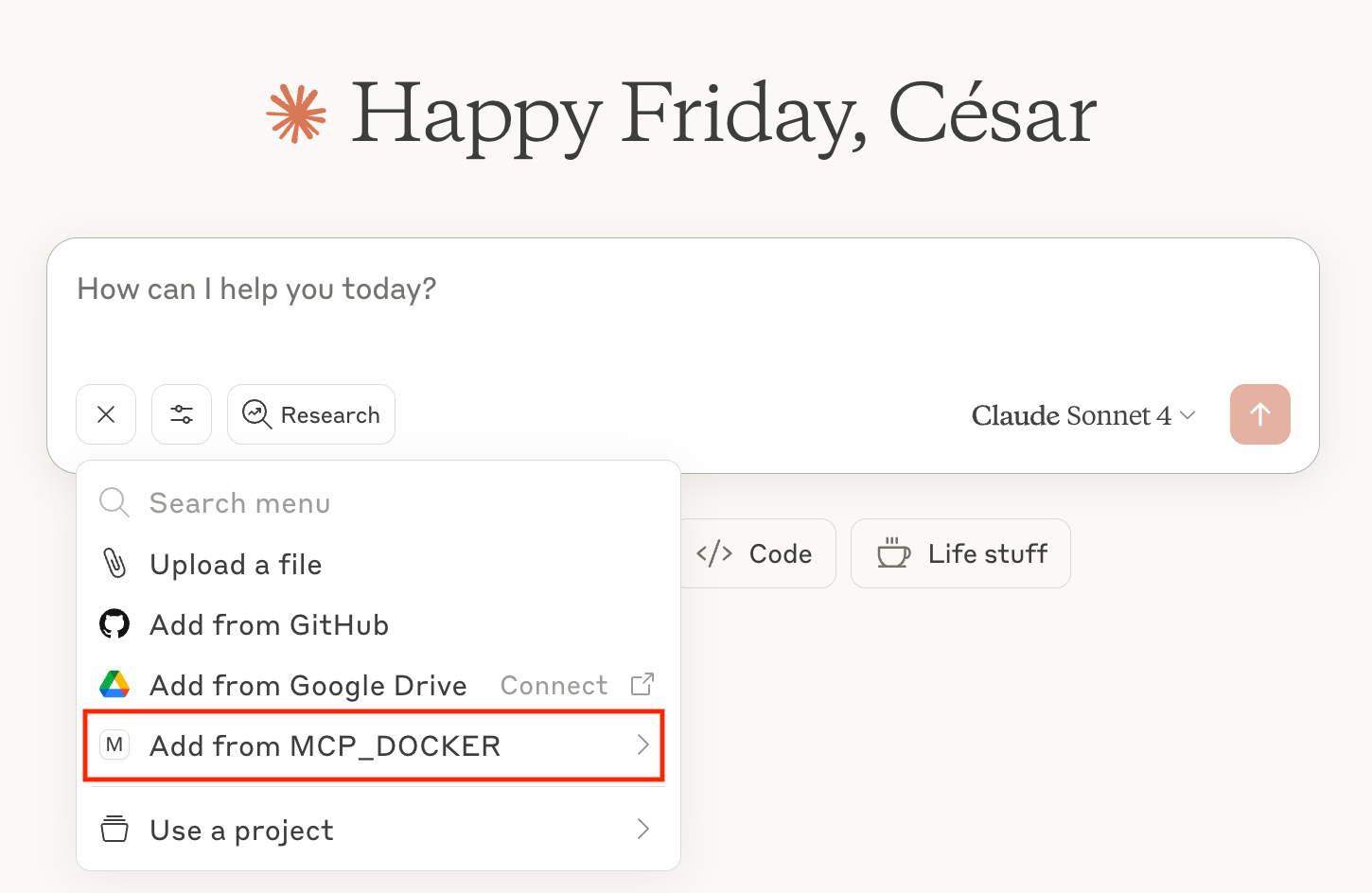

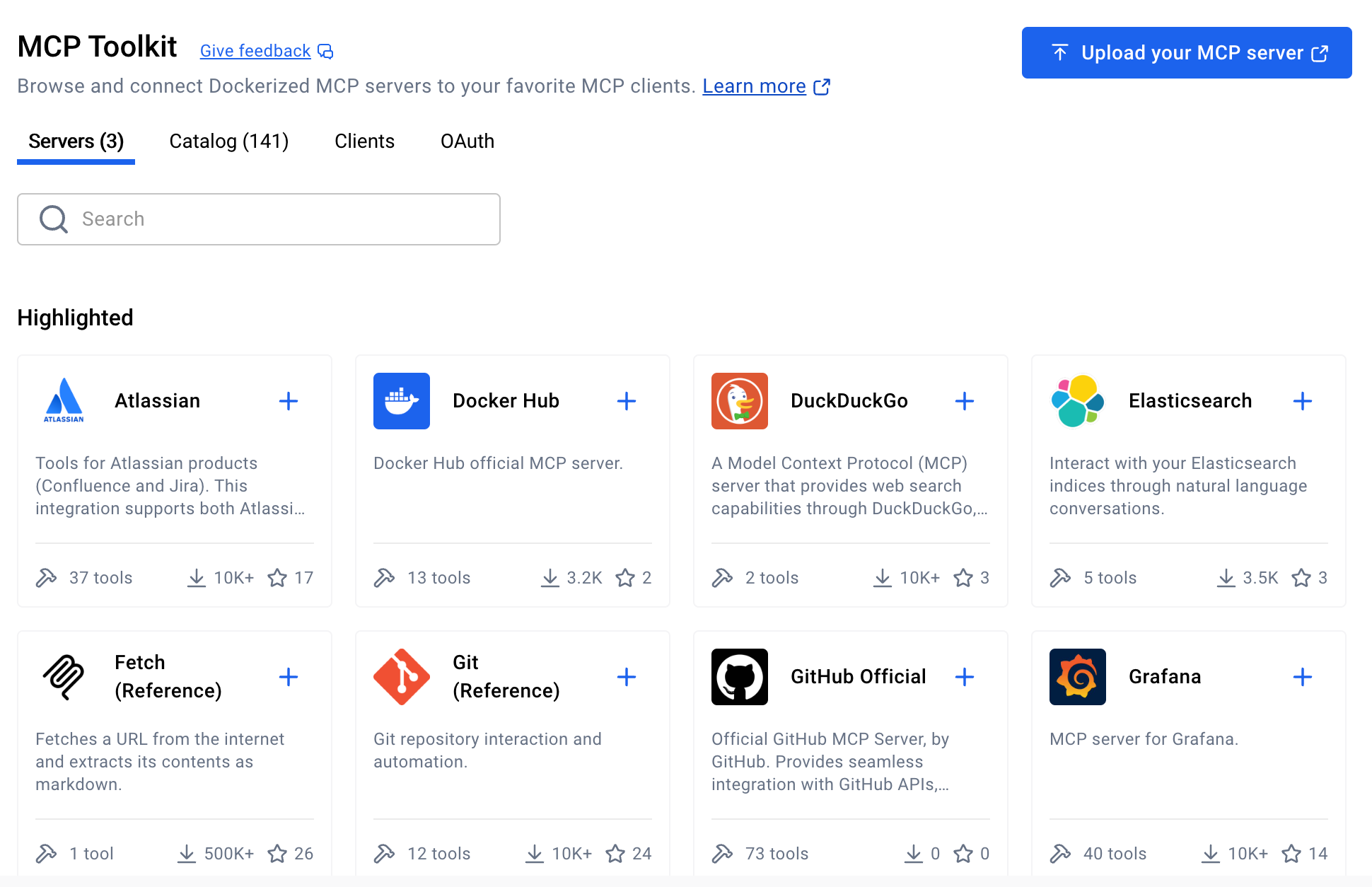

Combining Claude Desktop with Docker's MCP Toolkit!

This basically turns Claude Desktop into a powerful agent with access to a much wider range of tools (Docker packages MCP servers as containers).

Check out more here: MCP Toolkit

👨🏻‍💻 Interesting links

- Anthropic: How a company's values can shape its product strategy:

- A great reflection about builders in leadership roles:

🖋️ Quote of the week

“The best strategy is a balance between having a deliberate one, and a flexible, or emergent strategy.” – Clayton Christensen