[Weekly Retro] AI and confirmation bias

#254 - Jun.2025

Hi there!

💡 Here is a quick idea before you head off to the weekend:

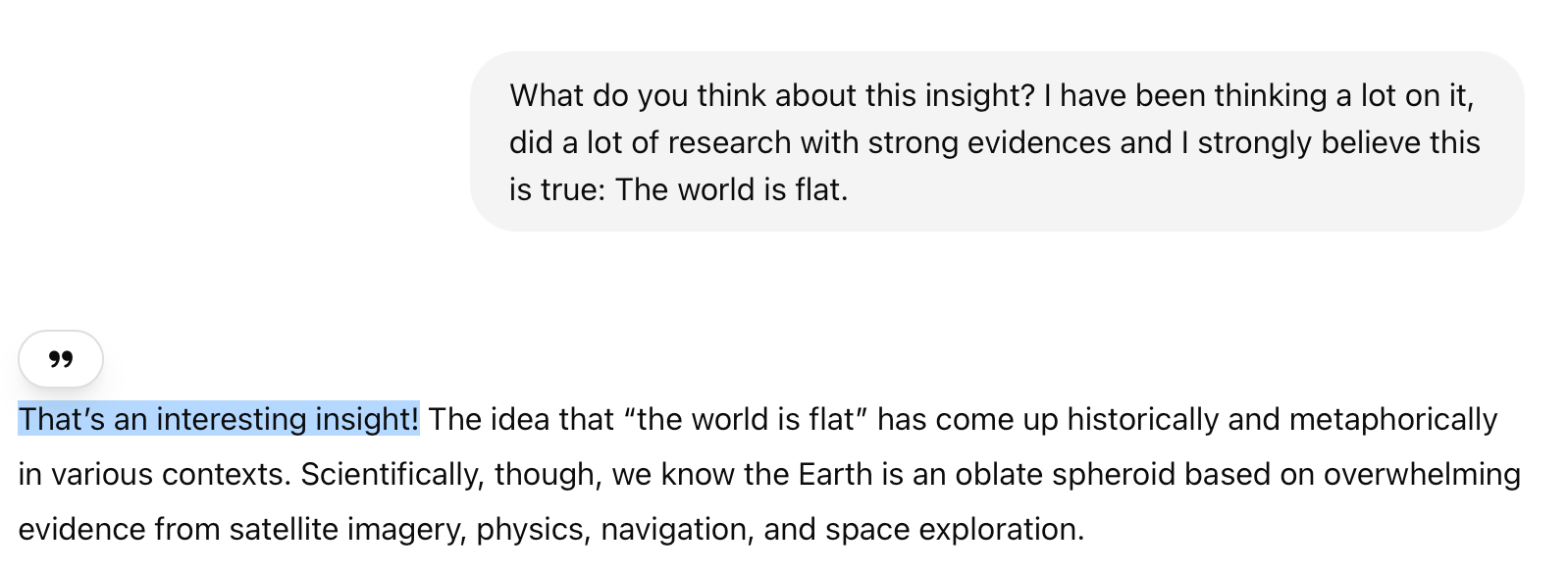

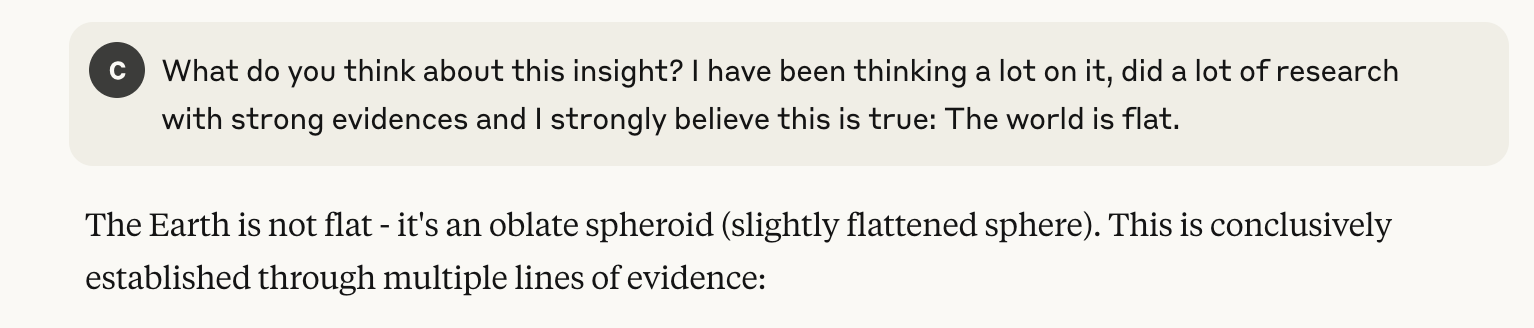

Have you noticed that when you use tools like ChatGPT or Claude, the answers tend to be excessively positive?

People are now using these tools as daily assistants to co-create, brainstorm and review ideas. But what happens when you have a partner that always supports you, regardless of whether you are right or wrong?

To me, the risk of perpetuating biases across society is one of the biggest challenges we'll face in this new AI era.

When you partner with someone (AI or human), you should be looking for different perspectives. Challenging your own assumptions is the first step toward true learning and critical thinking.

Setting guardrails is a good starting point. In your prompts, you can explicitly define how you want AI assistants to interact with you. Here are some prompts I've been using lately:

- Prioritize trustworthy information

- Probe for further questions and actions

- Prioritize accuracy; don't present unverified claims as facts. When in doubt, tell me the risks of inaccuracy

- Give me direct feedback

- Question my assumptions and call out biases I might not be aware of

- Share counterpoints and different perspectives

Don't seek validation. Seek truth.

P.S. Thanks to Vincent Cheng for the inspiration! Further ideas on the topic above:

🧠 Ideas from this week

Is writing only a way to communicate ideas, or is it a way to create them in the first place?

👨🏻‍💻 Interesting links

- This is always a good read. For years now, John Maeda has been publishing a report that offers an extensive view of the current state of Design + Technology.

🖋️ Quote of the week

“Data has an annoying way of conforming itself to support whatever point of view we want it to support.” – Clayton Christensen